Introduction

I enjoy taking part in hackathons and contributing to open-source projects, so I was excited to learn about a hackathon that combined both. I started by exploring the Airbyte platform, which was challenging at first because I had little experience with data engineering projects. Gradually, I came to understand how the project works and what advantages the Airbyte platform offers, and I decided to write quickstart guides for new users.

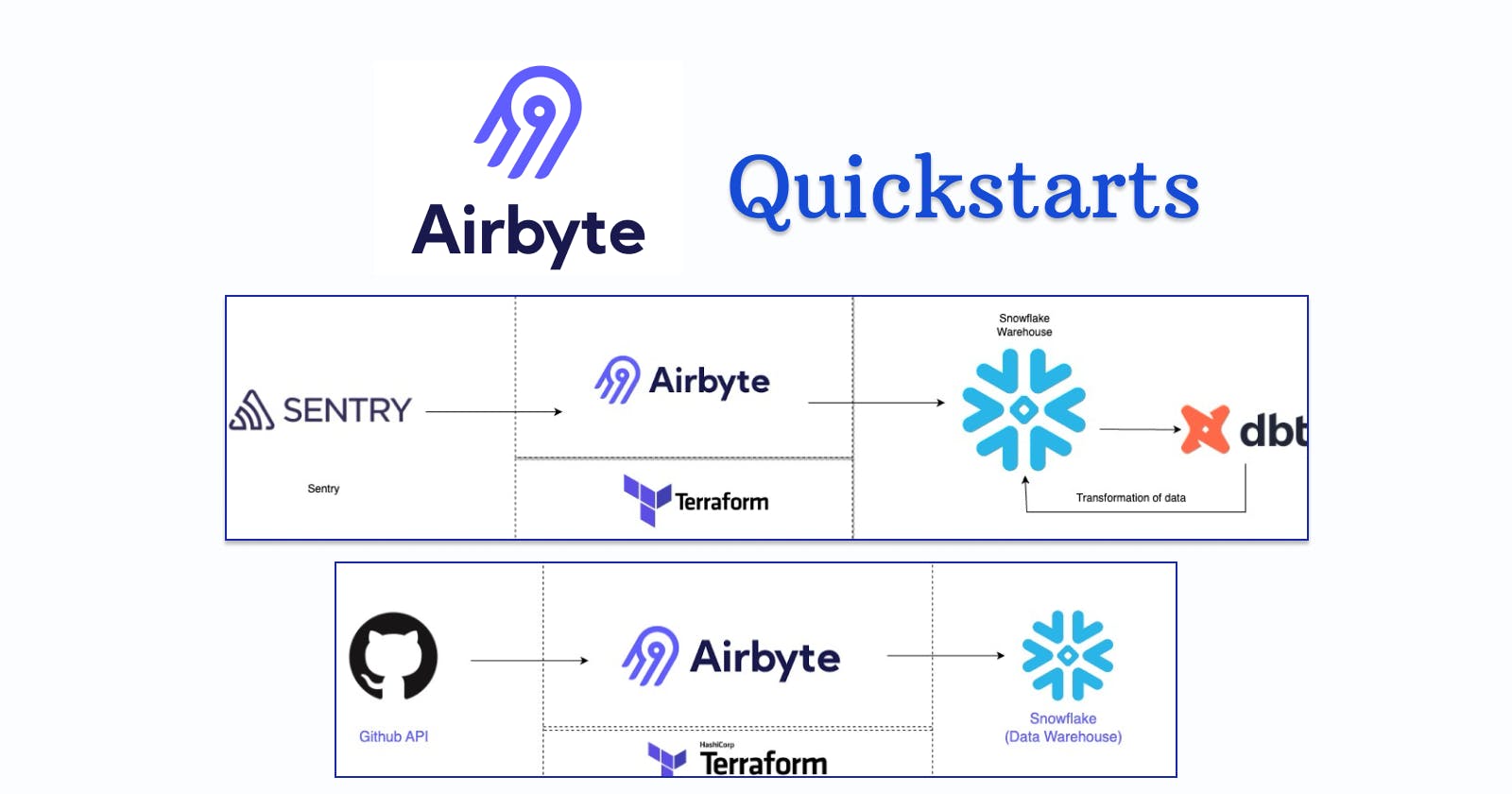

Quickstart 1: API to Warehouse

This quickstart helps new users extract data from APIs and load it into the data warehouse of their choice for analysis. In this example, I used the GitHub API to retrieve project- and issue-related data, which was then loaded into the Snowflake data warehouse.
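In practice a connector such as Airbyte's GitHub source handles extraction for you, but it can help to see what the "API" half of the pipeline involves. The following is a minimal sketch, not part of the quickstart itself: it builds a request URL for the GitHub REST issues endpoint and flattens the returned JSON into flat rows suitable for a warehouse table. The function names `issues_url` and `flatten_issues` and the selected columns are illustrative assumptions.

```python
from urllib.parse import urlencode

GITHUB_API = "https://api.github.com"


def issues_url(owner: str, repo: str, state: str = "all",
               per_page: int = 100, page: int = 1) -> str:
    """Build a GitHub REST API URL that lists issues for one repository."""
    query = urlencode({"state": state, "per_page": per_page, "page": page})
    return f"{GITHUB_API}/repos/{owner}/{repo}/issues?{query}"


def flatten_issues(payload: list[dict]) -> list[dict]:
    """Turn nested issue JSON into flat rows ready for a warehouse table.

    The issues endpoint also returns pull requests; those items carry a
    "pull_request" key, so they are skipped to keep only true issues.
    The chosen columns are a hypothetical example schema.
    """
    rows = []
    for item in payload:
        if "pull_request" in item:
            continue
        rows.append(
            {
                "number": item["number"],
                "title": item["title"],
                "state": item["state"],
                # "user" can be null for deleted accounts, so guard it.
                "author": (item.get("user") or {}).get("login"),
                "created_at": item["created_at"],
                # Collapse the nested label objects into one text column.
                "labels": ",".join(lbl["name"] for lbl in item.get("labels", [])),
            }
        )
    return rows
```

For example, `issues_url("airbytehq", "airbyte")` returns `https://api.github.com/repos/airbytehq/airbyte/issues?state=all&per_page=100&page=1`. Flattening before loading keeps the warehouse table simple to query; a managed connector does the equivalent normalization (plus pagination, rate limiting, and incremental syncs) automatically.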

The infrastructure layout for this quickstart is shown in the accompanying diagram.

The following link contains step-by-step instructions for setting up this quickstart.

Link: API to Warehouse

Quickstart 2: Error Resolution Optimization Stack

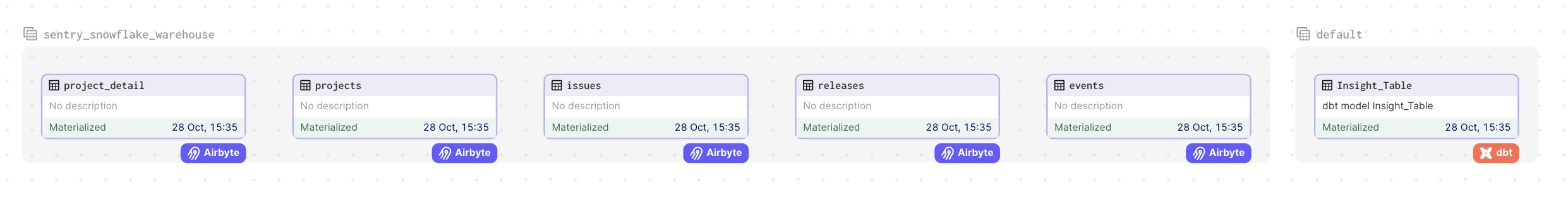

This quickstart helps users set up an error analysis stack built on Sentry, Airbyte, Snowflake, dbt, and Dagster. Airbyte extracts error data from Sentry and loads it into Snowflake, dbt transforms the data, and Dagster orchestrates the pipeline and lets you inspect it visually in its web UI.
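In the stack, transformations like this are written as dbt models; to keep one language across the examples, here is a transformation of that kind sketched in plain Python. It is a minimal illustration under assumed inputs: each raw record is taken to have flattened `project`, `level`, `count`, and `status` fields resembling a Sentry issue, and the function name `summarize_errors` is hypothetical. Check the field names against the tables Airbyte actually creates in Snowflake.

```python
from collections import defaultdict


def summarize_errors(issues: list[dict]) -> list[dict]:
    """Aggregate Sentry-style issue records into per-project, per-level totals.

    Assumed (hypothetical) record shape:
    {"project": "web", "level": "error", "count": "12", "status": "unresolved"}
    """
    totals: dict[tuple[str, str], dict[str, int]] = defaultdict(
        lambda: {"issues": 0, "events": 0, "unresolved": 0}
    )
    for issue in issues:
        bucket = totals[(issue["project"], issue["level"])]
        bucket["issues"] += 1
        # The event count may arrive as a string, so cast before summing.
        bucket["events"] += int(issue["count"])
        if issue.get("status") == "unresolved":
            bucket["unresolved"] += 1
    # Sort by event volume so the noisiest project/level pairs come first.
    return sorted(
        ({"project": p, "level": lvl, **stats} for (p, lvl), stats in totals.items()),
        key=lambda row: row["events"],
        reverse=True,
    )
```

Ranking by event volume is one reasonable way to decide which errors to resolve first; a dbt model would express the same idea as a `GROUP BY` over the synced Sentry table, with Dagster scheduling it after each Airbyte sync.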

The infrastructure layout for this stack is shown in the accompanying diagram.

The following link contains step-by-step instructions for setting up this quickstart.

Link: Error Resolution Optimization Stack

Conclusion

I would like to thank #Airbyte and #Hashnode for organizing an excellent hackathon that gave developers a valuable opportunity to build their open-source contribution skills. #Airbyte #AirbyteHackathon